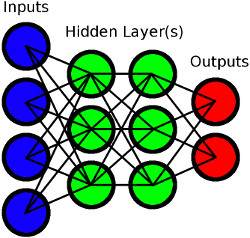

Before you can create an instance of a neural network, you will need to know what your network topology looks like: how many inputs, how many outputs, how many hidden layers, and how many neurons in each hidden layer. Hidden layers will generally have a number of neurons between the number of inputs and the number of outputs. You generally only need one hidden layer for simple tasks and two for more complex tasks. For example, if you have 10 inputs and 2 outputs, you might start with one hidden layer of 4 to 6 neurons and experiment to see which size gives the best results.

In a neural network, the "inputs" are numerical values that represent some information to be processed, and the "outputs" are numerical values that (attempt to) represent the outcome the network has been trained to produce. You can think of hidden layers as a temporary work area where processing happens as data flows from the inputs, through the hidden layers, to the outputs. The more layers and neurons per layer you have, the more computationally expensive it is to get results from your network.
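To make the cost point concrete, here is a small hypothetical Lua helper that counts the connection weights in a fully connected topology written as a table of layer sizes. It is not part of the library. It assumes each neuron also has one bias weight. Every one of these weights is used during a feed and adjusted during each back propagation, so the count grows quickly as you add layers and neurons.

```lua
-- Hypothetical helper (not part of the library): counts the trainable
-- weights in a fully connected topology such as {10, 5, 2}, assuming
-- every neuron also has one bias weight.
local function countWeights(topology)
  local total = 0
  for i = 1, #topology - 1 do
    -- each neuron in layer i+1 connects to every neuron of layer i, plus a bias
    total = total + (topology[i] + 1) * topology[i + 1]
  end
  return total
end

-- 10 inputs, one hidden layer of 5 neurons, 2 outputs
print(countWeights({10, 5, 2}))     --> 67
-- the same task with two hidden layers of 6 neurons each
print(countWeights({10, 6, 6, 2}))  --> 122
```

Adding a second hidden layer nearly doubles the work in this case. That is why a single hidden layer is usually the better starting point for simple tasks.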

Once you have constructed your neural network, you are locked into that topology, because changing it would invalidate any training done on it.

When you create a neural network, you must train it before it can be useful. A fresh neural network starts out with randomized weights. Each individual sample that is back-propagated into the network nudges those values toward the expected result. With enough training, the pattern begins to approximate a mathematical function that computes whatever task you assign to it.

How much training your task needs depends entirely on the topology of your network and how complex the task is. Some simpler networks might only require a few hundred training samples, while complicated networks may require millions. This library is fairly limited in that respect. Very complex tasks (such as image recognition) are beyond its scope, and it is recommended to use something like TensorFlow instead.

The Neural Network library is optional. To use it, you must require it in one of your source files (preferably main.lua) before use. It only needs to be required once, in a single file. It is recommended that you do this at the top of your main.lua file, outside of any functions or other code, using this code:

You may either pass a

This function is used to "feed" data into the network's inputs and perform calculations on it. You will use it both while training and for getting results once training is complete.

This function accepts either a variable number of arguments or

The number of values that you pass in must exactly match the number of inputs in the network.
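The sketch below illustrates what a feed-forward pass typically computes. Each neuron sums its weighted inputs plus a bias and passes the result through an activation function. The weight layout, the sigmoid activation, and the `feedForward` name are all hypothetical; this is not the library's code. For simplicity it takes the inputs as a single table, and it enforces the same rule as above: the input count must match the input layer.

```lua
-- Hypothetical sketch of a feed-forward pass; not the library's implementation.
-- weights[l][j][i] is the weight from neuron i in layer l to neuron j in
-- layer l+1; index topology[l] + 1 holds that neuron's bias weight.
local function feedForward(topology, weights, inputs)
  assert(#inputs == topology[1], "number of inputs must match the input layer")
  local values = inputs
  for l = 1, #topology - 1 do
    local nextValues = {}
    for j = 1, topology[l + 1] do
      local w = weights[l][j]
      local sum = w[topology[l] + 1]           -- bias weight (bias input is 1)
      for i = 1, topology[l] do
        sum = sum + values[i] * w[i]
      end
      nextValues[j] = 1 / (1 + math.exp(-sum)) -- sigmoid activation
    end
    values = nextValues
  end
  return values                                -- one value per output neuron
end

-- 2 inputs feeding 1 output neuron with weights {1, 1} and bias 0
local out = feedForward({2, 1}, {{{1, 1, 0}}}, {0, 0})
print(out[1])  --> 0.5
```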

Back propagation is one method of training neural networks. In this type of training, you feed data to a network and then "back propagate" the known, expected result. With each back propagation, the neural network adjusts its weights to better match what it is expected to do and, therefore, gives better results. Note that back propagating once or twice will be meaningless; you will almost certainly need to provide thousands of training samples.

You may pass values as a
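As a rough illustration of what each back propagation does, the following hypothetical Lua sketch applies the standard delta rule (gradient descent on squared error) to a single sigmoid neuron. A full network repeats this layer by layer, passing each neuron's error back to the layer before it. The sigmoid activation, the learning rate of 0.5, and the names `trainNeuron` and `sigmoid` are assumptions for illustration only.

```lua
-- Hypothetical sketch: gradient-descent update for ONE sigmoid neuron, the
-- building block that back propagation repeats layer by layer.
local function sigmoid(x) return 1 / (1 + math.exp(-x)) end

-- w holds one weight per input, followed by a bias weight
local function trainNeuron(w, inputs, target, rate)
  -- forward: weighted sum plus bias
  local sum = w[#w]
  for i = 1, #inputs do sum = sum + inputs[i] * w[i] end
  local out = sigmoid(sum)
  -- error signal: (target - out) scaled by the sigmoid's derivative
  local delta = (target - out) * out * (1 - out)
  -- nudge each weight toward the expected result
  for i = 1, #inputs do w[i] = w[i] + rate * delta * inputs[i] end
  w[#w] = w[#w] + rate * delta   -- the bias input is always 1
  return out
end

-- Teach a neuron logical OR: 5000 passes over 4 samples = 20000 updates
local w = {0, 0, 0}
local samples = {{{0, 0}, 0}, {{0, 1}, 1}, {{1, 0}, 1}, {{1, 1}, 1}}
for epoch = 1, 5000 do
  for _, s in ipairs(samples) do trainNeuron(w, s[1], s[2], 0.5) end
end
```

OR is linearly separable, so a single neuron can learn it. XOR is not, which is why a network for XOR needs a hidden layer.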

Returns the network's recent average error (aka RMS); this describes roughly how well the network is learning. A value near 1.0 indicates that the network is likely under-trained: it may be learning quickly, but it is probably still giving poor results. A value near 0.0 indicates that further training is having little effect. This may be because the network is already sufficiently trained, or because the training data itself isn't very effective.
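One common way to produce such a figure is to compute the RMS error of each sample's outputs and fold it into a smoothed running average. This approach is assumed here rather than taken from the library. The smoothing factor of 100 and all names below are hypothetical. The first sample seeds the average so that it starts from a realistic value rather than from zero.

```lua
-- Hypothetical sketch of tracking a "recent average error".
-- RMS error of one sample: square root of the mean squared output error.
local function rmsError(outputs, targets)
  local sum = 0
  for i = 1, #targets do
    local d = targets[i] - outputs[i]
    sum = sum + d * d
  end
  return math.sqrt(sum / #targets)
end

-- Smoothed running average over roughly the last `smoothing` samples
-- (the value 100 is an assumption, not the library's setting).
local smoothing = 100
local recentAverageError = nil

local function updateRecentAverage(err)
  if recentAverageError == nil then
    recentAverageError = err                   -- first sample seeds the average
  else
    recentAverageError = (recentAverageError * smoothing + err) / (smoothing + 1)
  end
  return recentAverageError
end

print(rmsError({0.5, 0.5}, {1, 0}))  --> 0.5
```

With sigmoid outputs between 0 and 1, each sample's RMS error is also between 0 and 1. This matches reading values near 1.0 as poor and values near 0.0 as well trained.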

Returns the results (values in the output layer) as a

The number of values returned will exactly match the size of the network's output layer.

Returns a

In most cases, you should simply use NeuralNet:save() to save it directly to a file.

Saves the neuron weights to a file. Use this after training so that you can re-load it later and not have to start over with training your network.

Loads the neuron weights back into a network from a file that was saved with NeuralNet:save().

The network is completely re-created

This function both modifies the current object and returns it for your convenience. This way, you can either create a new network and load directly into it, or use the return value from NeuralNet:load().
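The library's own file format is not documented here. Purely as an illustration, the hypothetical sketch below writes the topology and every weight to a plain-text string and reads them back. Reading rebuilds the weight tables from scratch, which mirrors saving a trained network and re-creating it later. The text format, the weight layout, and the `serialize`/`deserialize` names are all assumptions.

```lua
-- Hypothetical text format (not the library's): line 1 is the topology,
-- line 2 is every weight, both space-separated.
-- weights[l][j][i]: weight from neuron i of layer l to neuron j of layer l+1,
-- with index topology[l] + 1 holding the bias weight.
local function serialize(topology, weights)
  local flat = {}
  for l = 1, #weights do
    for j = 1, #weights[l] do
      for i = 1, #weights[l][j] do
        -- %.17g keeps enough digits for each double to round-trip exactly
        flat[#flat + 1] = string.format("%.17g", weights[l][j][i])
      end
    end
  end
  return table.concat(topology, " ") .. "\n" .. table.concat(flat, " ")
end

local function deserialize(text)
  local topoLine, weightLine = text:match("^([^\n]*)\n(.*)$")
  local topology, flat = {}, {}
  for n in topoLine:gmatch("%S+") do topology[#topology + 1] = tonumber(n) end
  for n in weightLine:gmatch("%S+") do flat[#flat + 1] = tonumber(n) end
  -- rebuild the weight tables in the same order they were written
  local weights, k = {}, 1
  for l = 1, #topology - 1 do
    weights[l] = {}
    for j = 1, topology[l + 1] do
      weights[l][j] = {}
      for i = 1, topology[l] + 1 do   -- +1 for the bias weight
        weights[l][j][i] = flat[k]
        k = k + 1
      end
    end
  end
  return topology, weights
end
```

To keep the string in a file, you could write it with Lua's standard `io.open(path, "w")` and `file:write(...)`, then read it back with `file:read("a")` (Lua 5.3 and later; Lua 5.1 uses `"*a"`).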

In this example, we will create a neural network and train it to compute XOR. XOR (eXclusive OR) means that input 1 OR input 2 must be true (1), but not both at the same time: 1 xor 0 is true (1), 0 xor 1 is true (1), 1 xor 1 is false (0), and 0 xor 0 is false (0).

Since Lua already provides a way to do this, a neural network is overkill and computationally expensive by comparison. However, it makes a good example.
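The following is a self-contained Lua sketch of the same idea that does not use the library at all. It builds a 2-4-1 network with sigmoid activations and trains it by back propagation on randomly chosen XOR samples. The layer sizes, learning rate, seed, and step count are assumptions. Results depend on the random starting weights (and Lua versions differ in their random generators). An unlucky start can occasionally get stuck, in which case more training or a different seed usually helps.

```lua
-- Hypothetical, self-contained XOR sketch; it does NOT use the library's API.
-- Topology: 2 inputs, one hidden layer of 4 neurons, 1 output.
math.randomseed(42)

local function sigmoid(x) return 1 / (1 + math.exp(-x)) end

local nIn, nHid = 2, 4
local rate = 0.5

-- random initial weights in [-1, 1]; the last entry of each row is the bias
local function randomRow(n)
  local row = {}
  for i = 1, n + 1 do row[i] = math.random() * 2 - 1 end
  return row
end
local hidW = {}
for j = 1, nHid do hidW[j] = randomRow(nIn) end
local outW = randomRow(nHid)

-- feed forward: returns the hidden activations and the single output
local function feed(inputs)
  local hidden = {}
  for j = 1, nHid do
    local sum = hidW[j][nIn + 1]
    for i = 1, nIn do sum = sum + inputs[i] * hidW[j][i] end
    hidden[j] = sigmoid(sum)
  end
  local sum = outW[nHid + 1]
  for j = 1, nHid do sum = sum + hidden[j] * outW[j] end
  return hidden, sigmoid(sum)
end

-- back propagate one known, expected result
local function backProp(inputs, target)
  local hidden, out = feed(inputs)
  local outDelta = (target - out) * out * (1 - out)
  -- hidden deltas use the output weights BEFORE they are updated
  local hidDelta = {}
  for j = 1, nHid do
    hidDelta[j] = outDelta * outW[j] * hidden[j] * (1 - hidden[j])
  end
  for j = 1, nHid do outW[j] = outW[j] + rate * outDelta * hidden[j] end
  outW[nHid + 1] = outW[nHid + 1] + rate * outDelta
  for j = 1, nHid do
    for i = 1, nIn do
      hidW[j][i] = hidW[j][i] + rate * hidDelta[j] * inputs[i]
    end
    hidW[j][nIn + 1] = hidW[j][nIn + 1] + rate * hidDelta[j]
  end
end

local samples = {
  {{0, 0}, 0}, {{0, 1}, 1}, {{1, 0}, 1}, {{1, 1}, 0},
}

-- tens of thousands of training samples, picked at random
for step = 1, 50000 do
  local s = samples[math.random(#samples)]
  backProp(s[1], s[2])
end

for _, s in ipairs(samples) do
  local _, out = feed(s[1])
  print(string.format("%d xor %d = %.3f (expected %d)", s[1][1], s[1][2], out, s[2]))
end
```

After training, the outputs for 0 xor 1 and 1 xor 0 typically end up close to 1, and the outputs for the other two cases close to 0. Compare the printed values against the expected ones to judge whether training succeeded.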

Page last updated at 2018-10-02 22:26:10